Introduction

Magnetico is a the self-hosted BitTorrent DHT crawler, with built-in searching capabilities.

Usually, to avoid bootstrapping database and crawl Internet from zero on your own, you can download a community dump (like @anindyamaiti’s here), and let Magnetico start from here.

But these dumps are usually proposed as SQLite databases (see this thread), because original Magnetico implementation didn’t support any other source.

Some years ago, there was indeed magnetico-go-migrator that had been developed for this very purpose, but it’s written in Go the project doesn’t seem to exist anymore… There is also magnetico_merge, but I wanted a stable and efficient solution (i.e. without hard-coded SQL schema and not written in Python either).

In this blog post, I’ll explain how I migrated such an SQLite database dump to PostgreSQL, which now Magnetico uses as its regular database, using PGLoader (because such a migration isn’t a straightforward process at all).

Initial setup

First we need to install some packages :

apt install -y icu-devtools pgloader sqlite3I consider Magnetico service directory tree to look somehow like this :

.

├── data

│ ├── database.sqlite3

│ ├── database.sqlite3-shm

│ └── database.sqlite3-wal

└── docker-compose.ymlPGLoader is broken (when it comes to SQLite)

Despite being pretty actively maintained, PGLoader still counts an incredible amount of opened issues. One that I’ve been struggling with is #1256, which causes PGLoader to fail to correctly “transpile” SQLite REFERENCES constraints :

ERROR PostgreSQL Database error 42601: syntax error at or near ")"

QUERY: ALTER TABLE "files" ADD FOREIGN KEY() REFERENCES "torrents"() ON UPDATE RESTRICT ON DELETE CASCADE… which, as you can see, comes from Magnetico schema :

sqlite3 -readonly data/database.sqlite3 ".schema files"CREATE TABLE files (

id INTEGER PRIMARY KEY,

torrent_id INTEGER REFERENCES torrents ON DELETE CASCADE ON UPDATE RESTRICT,

size INTEGER NOT NULL,

path TEXT NOT NULL

, is_readme INTEGER CHECK (is_readme IS NULL OR is_readme=1) DEFAULT NULL, content TEXT CHECK ((content IS NULL AND is_readme IS NULL) OR (content IS NOT NULL AND is_readme=1)) DEFAULT NULL);

CREATE UNIQUE INDEX readme_index ON files (torrent_id, is_readme);So if we wrap this up, a naive approach (or rather “an approach that I’ve tried for you” ![]() ) would be to execute a PGLoader command derived from upstream documentation :

) would be to execute a PGLoader command derived from upstream documentation :

LOAD database

from sqlite://data/database.sqlite3

into postgres://magnetico@unix:run/postgresql:5432/magnetico

WITH include drop, create tables, create indexes, reset sequences,

quote identifiers,

on error resume next,

prefetch rows = 10000

SET work_mem to '16 MB',

maintenance_work_mem to '512 MB'

AFTER LOAD DO

$$ CREATE EXTENSION IF NOT EXISTS pg_trgm; $$,

$$ ALTER TABLE files ADD FOREIGN KEY(torrent_id) REFERENCES torrents ON UPDATE RESTRICT ON DELETE CASCADE; $$;You should have noticed prefetch rows option (which defaults to 100000) that I had to lower as, if you don’t have a whole datacenter at your disposal either, it leads to heap memory exhaustion.

Later, I’ve also hit another PGLoader “transpilation” issue, this time related to SQLite

PRIMARY KEY… Long story short : this is an SQL nightmare.

But anyway, this PGLoader command fails pretty hard and quick, because of…

Encoding issues… as always

BitTorrent DHT actually contains lots of garbage, including invalid UTF-8 character sequences.

PostgreSQL enforces strict character encoding checks, which prevents us from directly importing TEXT columns (torrents.name and files.path) from SQLite. Moreover, it stops current table processing when it encounters an error (including an encoding one), and PGLoader doesn’t continue with the remaining rows afterward.

So I decided to adapt @Crystalix007’s solution for cleaning the SQLite dump from invalid character sequences, without the major drawback of duplicating it on disk multiple times (which by the way additionally lead to database corruption in my case…).

The final recipe

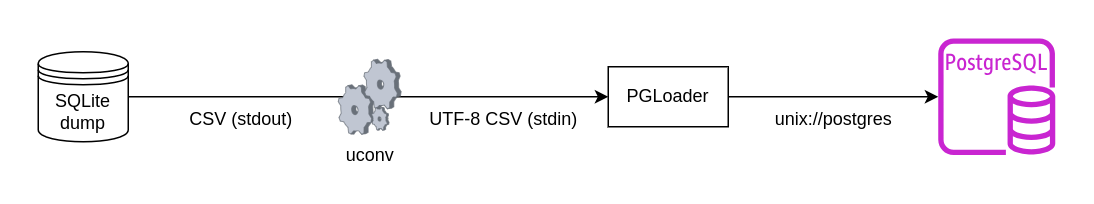

So the idea here is to walk away from PGLoader SQLite connector and let Magnetico create a clean database schema. Then we dump SQLite database tables as CSV streamed through uconv (in order to skip invalid UTF-8 character sequences, which spares us some useless gigabytes by the way ![]() ) down to PostgreSQL database thanks to PGLoader :

) down to PostgreSQL database thanks to PGLoader :

As CSV is a text format, special care must be taken when importing torrents.info_hash, which is an SQLite BLOB column (containing raw bytes). For this we leverage PostgreSQL’s bytea hex format and encode those bytes on-the-fly as hexadecimal.

The final steps

Bootstrap Magnetico

Extend Magnetico Compose stack with a PostgreSQL service, as below :

version: "2"

services:

magnetico:

image: ghcr.io/tgragnato/magnetico:latest

restart: always

ports:

- "127.0.0.1:8080:8080"

command:

- "--database=postgres://magnetico:password@postgres:5432/magnetico?sslmode=disable"

- "--max-rps=500"

- "--addr=0.0.0.0:8080"

depends_on:

- postgres

postgres:

image: docker.io/postgres:18-alpine

restart: always

shm_size: 128mb

environment:

POSTGRES_USER: "magnetico"

POSTGRES_PASSWORD: "password"

POSTGRES_DB: "magnetico"

volumes:

- ./data/postgres:/var/lib/postgresql

# required at first PostgreSQL start, to enable 'pg_trm' extension

- ./load_trm.sql:/docker-entrypoint-initdb.d/load_trm.sql:ro

# allow PGLoader to connect to PostgreSQL using UNIX domain socket, for maximum performance

- ./run:/var/run… and then run the following commands :

mkdir -p data/postgres/ run/

echo "CREATE EXTENSION IF NOT EXISTS pg_trgm;" > load_trm.sql

podman-compose up -d && podman-compose logs -f magnetico

# press CTRL+C when you see "magnetico is ready to serve on [...]!" log message !

# stop Magnetico service (only)

podman-compose stop magneticoRun migration

You’ll have to prepare a PGLoader command file (load_table.pgl), which imports CSV content read from stdin into PostgreSQL TARGET_TABLE :

LOAD CSV

FROM stdin

INTO postgres://magnetico@unix:run/postgresql:5432/magnetico

TARGET TABLE '{{ TARGET_TABLE }}'

WITH disable triggers,

fields optionally enclosed by '"',

fields escaped by double-quote,

fields terminated by ','

SET work_mem to '32 MB',

maintenance_work_mem to '512 MB'

BEFORE LOAD DO

$$ TRUNCATE TABLE "{{ TARGET_TABLE }}" CASCADE; $$,

$$ UPDATE pg_index SET indisready=false WHERE indrelid = (SELECT oid FROM pg_class WHERE relname = '{{ TARGET_TABLE }}'); $$

AFTER LOAD DO

$$ UPDATE pg_index SET indisready=true WHERE indrelid = (SELECT oid FROM pg_class WHERE relname = '{{ TARGET_TABLE }}'); $$,

$$ SELECT setval('seq_{{ TARGET_TABLE }}_id', (SELECT MAX(id) FROM "{{ TARGET_TABLE }}")); $$;It turns out PGLoader doesn’t specify CASCADE when truncating target table, so we must do it ourselves as import prelude.

For performance purpose, we disable all TARGET_TABLE indexes during import (following @fle’s trick) and trigger whole database re-indexation afterwards (see below).

This is a(nother) workaround as PGLoader isn’t able to drop indexes (before reconstructing them at the end) when some SQL constraints depend on them.

You can then execute this Bash script (import_db.sh) :

#!/usr/bin/env bash

set -euo pipefail

# 1. Import 'torrents' table as CSV (with hex-encoded 'info_hash' column and without invalid UTF-8 characters)

sqlite3 -readonly data/database.sqlite3 -csv \

"SELECT id, '\x'||hex(info_hash), name, total_size, discovered_on, updated_on, n_seeders, n_leechers, modified_on FROM torrents;" \

| uconv --callback skip -t utf8 \

| TARGET_TABLE="torrents" pgloader -v load_table.pgl

# 2. Import 'files' table as CSV (without invalid UTF-8 characters)

sqlite3 -readonly data/database.sqlite3 -csv \

"SELECT * FROM files;" \

| uconv --callback skip -t utf8 \

| TARGET_TABLE="files" pgloader -v load_table.pgl

# 3. Trigger database complete re-indexation

podman-compose exec postgres \

psql -U magnetico -c 'REINDEX DATABASE magnetico;'Restart Magnetico

Once database import is done, you may simplify your postgres Compose service definition and restart services :

postgres:

image: docker.io/postgres:18-alpine

restart: always

shm_size: 128mb

environment:

POSTGRES_USER: "magnetico"

POSTGRES_PASSWORD: "password"

POSTGRES_DB: "magnetico"

volumes:

- ./data/postgres:/var/lib/postgresql

- - ./load_trm.sql:/docker-entrypoint-initdb.d/load_trm.sql:ro

- - ./run:/var/runpodman-compose up -dIf you’re satisfied with the imported dataset you can clean all of our mess (as well as old SQLite database dump) :

rm -rf \

data/database.sqlite3{,-shm,-wal} \

run/ \

load_table.pgl \

import_db.sh \

/tmp/pgloader

apt autoremove --purge icu-devtools pgloader sqlite3Conclusion

Migration took around ~12 hours on my setup (~30M torrents with ~950M files), but it took me more than a week to completely figure out the process and tidy it up ![]()

‘hope it helped and that one day most of Magnetico users will eventually drop SQLite, preferring PostgreSQL (custom archive) dumps.

Don’t forget to share with the world… tracker-free ![]()